Three Perspectives on Deep Learning

“Deep learning has solved vision…” - Demis Hassabis (Google DeepMind)

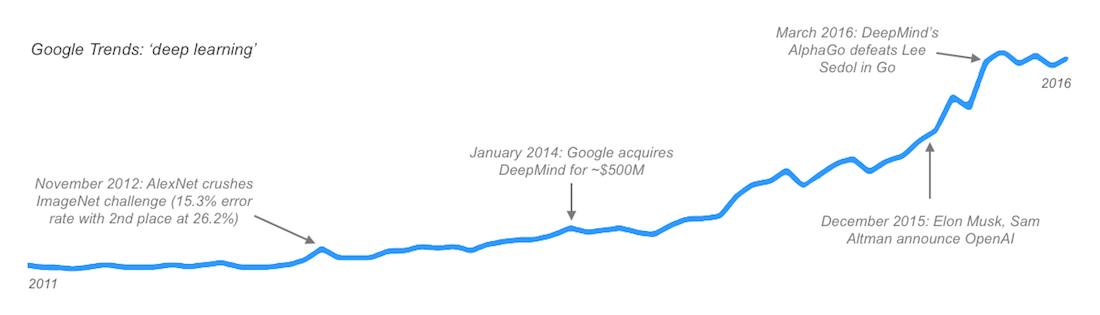

When Demis Hassabis opened his lecture at the 2015 CERN Data Science conference with this claim, I blinked in disbelief. Hundreds of brilliant scientists have spent their lives pondering the mysteries of the human eye. How could he make that claim so casually? Determined to prove him wrong, I looked at the latest computer vision research and… indeed, certain areas are at human performance.

In fact, deep learning can solve more than vision. It can defeat the world champion in Go, translate text, and paint like Van Gogh. It’s also been known to fly helicopters extremely well, fold proteins, and classify subatomic particles. Deep learning is awesome…but what exactly is it? After being obsessed with this field for more than a year, I should have a concise and satisfying answer. Strangely, I have three.

Biological picture

“A lot of the motivation for deep nets did come from looking at the brain…” - Geoffrey Hinton

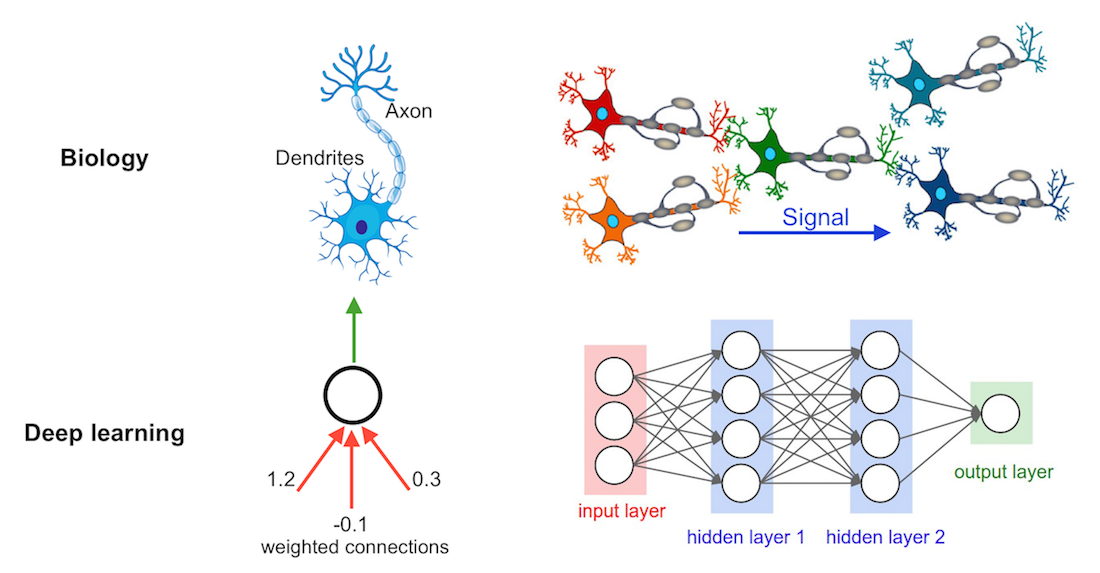

The field of deep learning got started when scientists tried to approximate certain circuits in the brain. For example, the Convolutional Neural Network (ConvNet) – a cornerstone of modern computer vision – was inspired by a paper about neurons in the monkey striate cortex. Another example is the field of Reinforcement Learning – the hottest area of AI right now – which was built on our understanding of how the brain processes rewards.

Skeptics claim that deep learning stretches the brain metaphor too far. Some of their critiques are:

- Scale: The largest neural nets have around 100 million ‘connections’. The human brain has over 100 trillion connections. With faster GPUs and cluster computing, the size of deep learning models has increased by orders of magnitude in the past few years. This pattern will probably continue.

- Backprop: Some scientists believe that the backpropagation algorithm has no biological correlate. Others disagree.

- Architecture: Certain areas of the brain are able to store really complicated memories, perform symbolic operations, and build models of the outside world. These are things that state-of-the-art deep learning cannot do. There’s been a push in research to change this.

Mathematical picture

“These adjustable parameters, often called weights, are real numbers that can be seen as ‘knobs’ that define the input–output function of the machine” - 2015 Nature Review

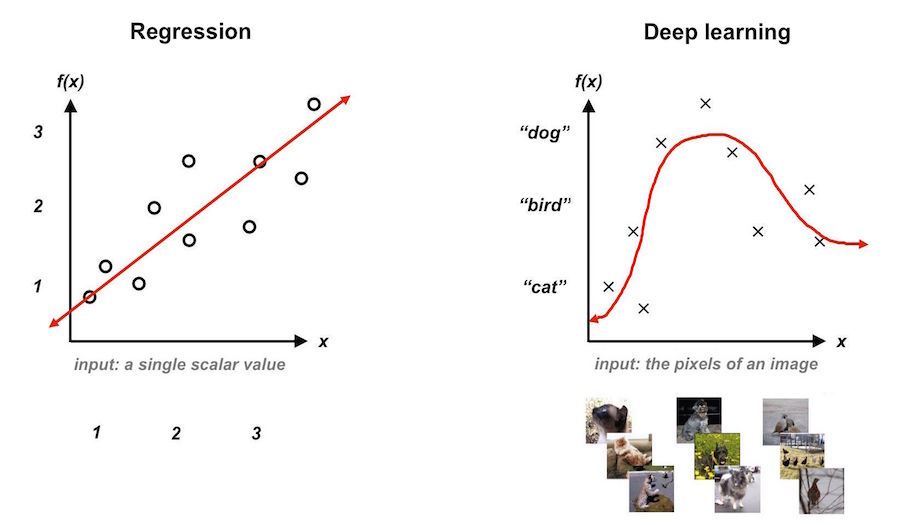

Mathematicians will tell you that deep learning is just complicated regression. Regression is the art of finding a function that explains the relationship between an input and an output. The simplest example is from middle school math when we used two points on a Cartesian plane to find the variables \(m\) and \(b\) in \(f(x)=mx+b\).
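To make the middle school example concrete, here is a minimal sketch in Python. The function name `line_through` and the sample points are made up for illustration; the only thing it does is recover \(m\) and \(b\) from two points.

```python
def line_through(p1, p2):
    """Return (m, b) for the line f(x) = m*x + b through two points."""
    (x1, y1), (x2, y2) = p1, p2
    if x1 == x2:
        # A vertical line has no slope, so it cannot be written as m*x + b.
        raise ValueError("points must have different x values")
    m = (y2 - y1) / (x2 - x1)  # slope: rise over run
    b = y1 - m * x1            # intercept: solve y1 = m*x1 + b for b
    return m, b


# Hypothetical points chosen for illustration.
m, b = line_through((1, 3), (3, 7))
print(m, b)
```

With exactly two points and two unknowns, the answer is found in closed form. No searching or "learning" is needed yet.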

Deep learning is the same thing except \(f(x)\) can be arbitrarily complex and nonlinear. The input could be the pixels of an image and the outputs a caption which describes the image. The input could be a sentence of French and the output could be a sentence in English. Obviously, functions that perform these mappings must be extremely complicated. In fact, deep learning models often have millions of free parameters instead of just an \(m\) and a \(b\). That said, it’s just regression.
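The jump from \(m\) and \(b\) to "millions of free parameters" can be sketched at toy scale. The example below is an illustration under stated assumptions, not a recipe from any particular paper: it fits a tiny one-hidden-layer network with 25 parameters to the curve \(y=x^2\) using plain gradient descent on mean squared error. The network size, learning rate, step count, and all function names are arbitrary choices made for this sketch.

```python
import math
import random


def init_params(hidden, seed=0):
    """Random starting values for a 1-input, 1-output network."""
    rng = random.Random(seed)
    return {
        "w1": [rng.uniform(-1, 1) for _ in range(hidden)],  # input -> hidden
        "b1": [0.0] * hidden,
        "w2": [rng.uniform(-1, 1) for _ in range(hidden)],  # hidden -> output
        "b2": 0.0,
    }


def count_params(p):
    """Number of adjustable 'knobs' in the model."""
    return len(p["w1"]) + len(p["b1"]) + len(p["w2"]) + 1


def forward(p, x):
    """Compute f(x) and return the hidden activations as well."""
    h = [math.tanh(w * x + b) for w, b in zip(p["w1"], p["b1"])]
    y_hat = sum(w * hj for w, hj in zip(p["w2"], h)) + p["b2"]
    return y_hat, h


def mse(p, data):
    """Mean squared error of the model over (x, y) pairs."""
    return sum((forward(p, x)[0] - y) ** 2 for x, y in data) / len(data)


def train(p, data, lr=0.02, steps=2000):
    """Full-batch gradient descent; gradients are derived by the chain rule."""
    hidden = len(p["w1"])
    n = len(data)
    for _ in range(steps):
        g_w1 = [0.0] * hidden
        g_b1 = [0.0] * hidden
        g_w2 = [0.0] * hidden
        g_b2 = 0.0
        for x, y in data:
            y_hat, h = forward(p, x)
            err = 2.0 * (y_hat - y) / n  # d(loss)/d(y_hat) for this point
            g_b2 += err
            for j in range(hidden):
                g_w2[j] += err * h[j]
                # Derivative of tanh(z) is 1 - tanh(z)^2.
                dpre = err * p["w2"][j] * (1.0 - h[j] ** 2)
                g_w1[j] += dpre * x
                g_b1[j] += dpre
        for j in range(hidden):
            p["w1"][j] -= lr * g_w1[j]
            p["b1"][j] -= lr * g_b1[j]
            p["w2"][j] -= lr * g_w2[j]
        p["b2"] -= lr * g_b2
    return p


# Toy dataset: 21 points on the curve y = x^2, for x in [-1, 1].
data = [(i / 10.0, (i / 10.0) ** 2) for i in range(-10, 11)]

if __name__ == "__main__":
    params = init_params(hidden=8)
    print("parameters:", count_params(params))
    print("error before training:", mse(params, data))
    train(params, data)
    print("error after training:", mse(params, data))
```

The structure is the same as the two-point line fit: pick a family of functions, then adjust its parameters until the outputs match the data. The differences are that the function is nonlinear and that the parameters are found by repeated small adjustments rather than by a formula.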

Computer science picture

“The cost of a world-class deep learning expert was about the same as a top NFL quarterback prospect” - Peter Lee (Microsoft Research)

The tech industry. Tech companies love to build hype around vague keyphrases such as ‘cloud’, ‘big data’, and ‘machine learning.’ Inevitably, ‘deep learning’ will join the list. There are really two approaches to deep learning in tech. The first says: this gets great results and makes us a profit – let’s pour a ton of money into it. The second says: this is the cure-all, next-big-thing, singularity-inducing pinnacle of computer science – let’s pour a ton of money into it. There are a few notable exceptions.

Edit, December 2020. I had previously linked to OpenAI as a ‘notable exception’, but in recent years OpenAI has been acting more like ClosedAI 😞. My new favorite ‘notable exception’ is the ML Collective, a renegade band of ML researchers who also happen to be friends. This group (of which I am a member) is a nonprofit aimed at advancing deep learning research while making it easier for people from non-traditional paths to get involved.

Research. Even in academia, researchers tend to prioritize results over understanding; deep learning theory lags woefully behind practice. If you do not have a background in computer science, you’ll get the best answers to the question “What can deep learning do?” and the worst answers to the question, “What is deep learning?” from people in tech.

Closing thoughts

“What I cannot create I do not understand” - Richard Feynman

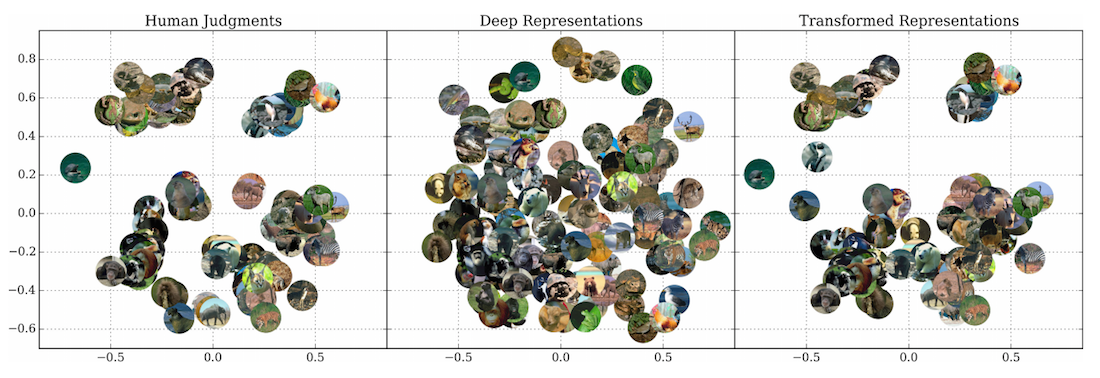

Deep learning lets us create some really exciting projects in the areas of computer vision, translation, and strategy. Richard Feynman once said, “What I cannot create I do not understand,” and this really plays to our advantage here. Now that we are able to create these projects, they can help us understand puzzles such as human vision, the structure of language, and decision making on an entirely new level.