Nearshore Physics and Automated Ship Wake Detection

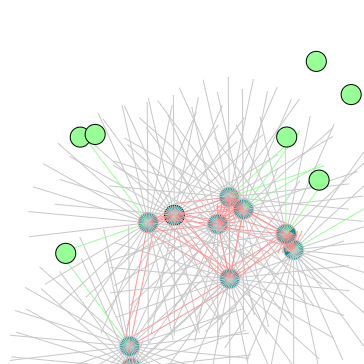

Salt water and fresh water have different densities, so where they meet they form layers. Waves called internal waves travel along the interface between these layers. In this project, I tracked internal waves in time-lapse footage from the Columbia River Estuary.

Sam Greydanus, Robert Holman

AGU 2014 (Oral Presentation)