The Paths Perspective on Value Learning

I recently published a Distill article about value learning. This post includes a link to the article and some commentary on the Distill format.

Thoughts on Distill

I’ve admired Distill since its inception. Early on I could tell by the clean diagrams, interactive demos, and digestible prose that the authors knew a lot about their craft. On top of that, I was excited because Distill filled two important niches.

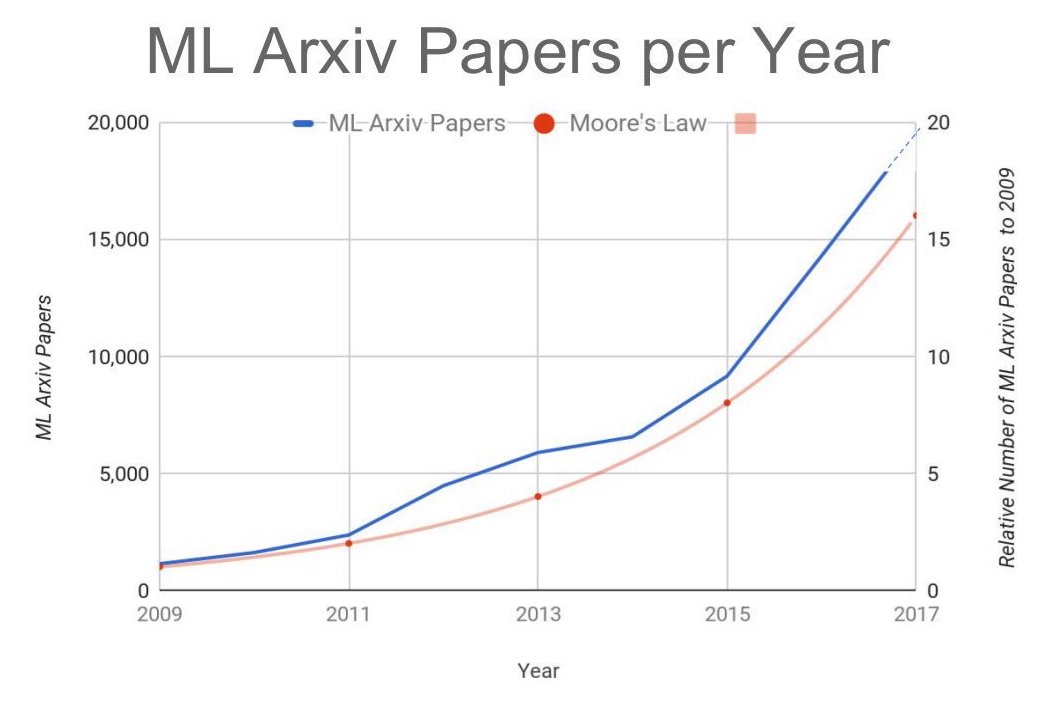

Niche 1: Repaying research debt. Our field has a rapid publication cycle and most researchers write more than one paper per year. The unintended consequence is that many of these papers are poorly written, quickly outdated, or even flat-out incorrect. Distill’s solution has been to collect the most important ideas and insights of machine learning in one place, without all the noise. In my experience this works well; I often learn as much from reading one Distill paper as I would from ten conference papers.

Niche 2: Highlighting qualitative results. These days, it can be difficult to publish a deep learning paper without a nice table showing that your approach achieves state-of-the-art results. These tables are certainly important, but a qualitative understanding of what the model is doing and why is just as important. Distill prioritizes these “science of deep learning” questions, as Chris Olah told me, “because there is so much more to a neural network than its test loss.”

My experience with Distill

Writing a Distill article is Type 2 fun. It’s not easy and it’s not comfortable, but it will make you a better researcher. My experience involved a lot of background research (re-reading Sutton’s RL textbook and watching David Silver’s lectures on YouTube) and a lot of work at a whiteboard. During the drafting process I had to delete everything and start from scratch a couple of times. At times the process was frustrating and painful, but I sincerely believe that most of it was “growing pains” because I was pushing myself to create something really excellent.

Writing a Distill article is not an individual pursuit. I was fortunate enough to work with Chris Olah and others from the editorial team. The editors have high standards but they make a sincere effort to get new people involved. They spent a lot of time teaching me the skills and thought processes I needed in order to make the article shine.

Advice. My main advice is that writing a Distill article is not like writing a conference paper. If you approach it with the same expectations, you will be sad. If you approach it with different expectations, you will be happy. A few key differences:

- Audience. Your audience is no longer your reviewers plus a smattering of people who study what you study. Now your audience is anyone who cares about ML research. When I write a conference paper, I imagine I'm explaining my results to one of my professors. When I write a Distill article, I imagine I'm explaining my results to a smart undergraduate.

- The story you tell. Many conference papers target a shortcoming of previous work and propose a better solution. Distill articles read more like natural science articles: the author collects data, analyzes it, and then reports what they found. A good example is Activation Atlases, where the authors observe the features of a vision model, hypothesize about what they are doing, and then verify the hypothesis. Some articles, such as How to Use t-SNE Effectively, don't even report new knowledge and instead focus on explaining a known concept really well.

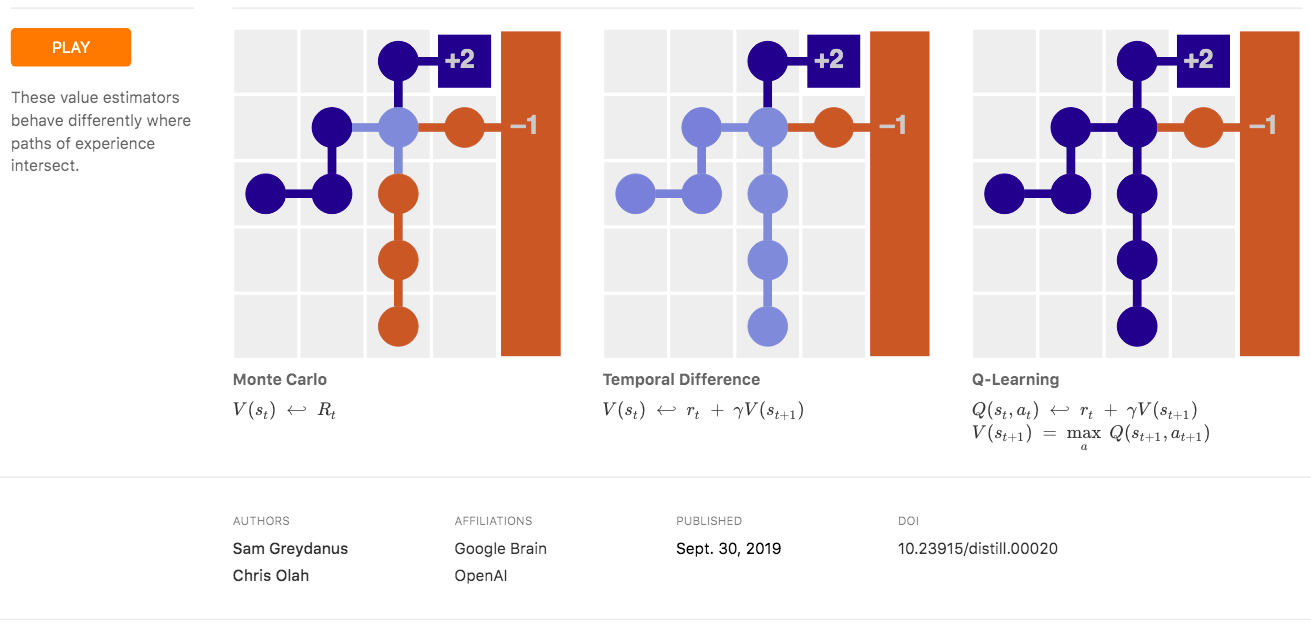

- Diagrams and demos. Distill puts a premium on clarity. This doesn't mean that you need to have fancy diagrams or demos in your article (some strong Distill articles do not), but if they are the best way to explain something, then use them! I got a lot of satisfaction from making my diagrams in Illustrator, coding my demos in JavaScript, and putting lots of time and thought into making things beautiful.

- Time to submission. From the initial idea to the final draft, you'll need about twice as much time as you'd need for a conference paper.

- Time of review process. Plan on the review process also taking about twice as long.

- Sense of wonder. The very best modes of science communication have a way of inspiring wonder and excitement in their audience. Distill is no exception. Its articles help young researchers see the beauty and promise of the field in a way that conference papers, textbooks, and boring lectures cannot. An implicit part of writing a Distill article involves channeling your "sense of wonder" so that your readers can experience it too.

Takeaway. I had a good experience working with Distill and would recommend it to others. I’m happy to answer specific questions about the process over email.