Mar 30, 2025 The Cursive Transformer

We train a small GPT-style Transformer model to generate cursive handwriting. The trick to making this work is a custom tokenizer for pen strokes.

Mar 12, 2023 Six Experiments in Action Minimization

Using action minimization, we obtain dynamics for six different physical systems including a double pendulum and a gas with a Lennard-Jones potential.

Mar 5, 2023 Finding Paths of Least Action with Gradient Descent

The purpose of this simple post is to bring to attention a view of physics which isn’t often communicated in intro courses: the view of physics as optimization.

May 24, 2022 Studying Growth with Neural Cellular Automata

We train simulated cells to grow into organisms by communicating with their neighbors. Then we use them to study patterns of growth found in nature.

May 8, 2022 A Structural Optimization Tutorial

Structural optimization lets us design trusses, bridges, and buildings starting from the physics of elastic materials. Let's code it up, from scratch, in 180 lines.

Mar 27, 2022 How Simulating the Universe Could Yield Quantum Mechanics

We look at the logistics of simulating the universe. We find that enforcing conservation laws, isotropy, etc. in parallel could lead to quantum-like effects.

Jan 25, 2022 Dissipative Hamiltonian Neural Networks

This class of models can learn Hamiltonians from data even when the total energy of the system is not perfectly conserved.

Jun 11, 2021 Piecewise-constant Neural ODEs

We propose a timeseries model that can be integrated adaptively. It jumps over simulation steps that are predictable and spends more time on those that are not.

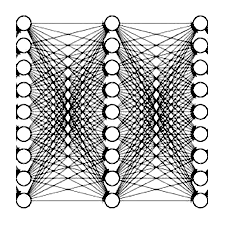

Dec 1, 2020 Scaling down Deep Learning

In order to explore the limits of how large we can scale neural networks, we may need to explore the limits of how small we can scale them first.

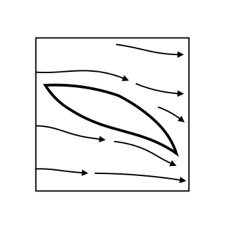

Oct 14, 2020 Optimizing a Wing Inside a Fluid Simulation

How does physics shape flight? To show how fundamental wings are, I derive one from scratch by differentiating through a wind tunnel simulation.

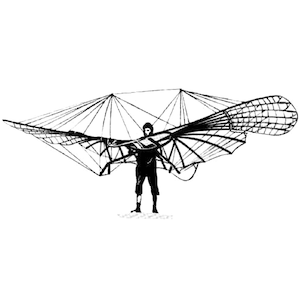

Oct 13, 2020 The Stepping Stones of Flight

How did flight become a reality? Let's look at the inventors who took flight from the world of ideas to the world of things – focusing in particular on airfoil design.

Oct 12, 2020 The Story of Flight

Why do humans want to fly? Let's start by looking at the humans for whom the desire to fly was strongest: the early aviators.

Aug 27, 2020 Self-classifying MNIST Digits

We treat every pixel in an image as a biological cell. We train these cells to signal to one another and determine what digit they are shaping.

Mar 10, 2020 Lagrangian Neural Networks

As a complement to Hamiltonian Neural Networks, I discuss how to parameterize Lagrangians with neural networks and then learn them from data.

Jan 27, 2020 The Paths Perspective on Value Learning

I recently published a Distill article about value learning. This post includes a link to the article and some commentary on the Distill format.

Dec 15, 2019 Neural Reparameterization Improves Structural Optimization

We use neural networks to reparameterize structural optimization, building better bridges, skyscrapers, and cantilevers while enforcing hard physical constraints.

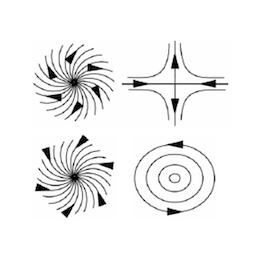

May 15, 2019 Hamiltonian Neural Networks

Instead of crafting Hamiltonians by hand, we propose parameterizing them with neural networks and then learning them directly from data.

Nov 1, 2017 Visualizing and Understanding Atari Agents

Deep RL agents are good at maximizing rewards but it's often unclear what strategies they use to do so. I'll talk about a paper I wrote to solve this problem.

Oct 30, 2017 Training Networks in Random Subspaces

Do we really need over 100,000 free parameters to build a good MNIST classifier? It turns out that we can eliminate 80-90% of them.

Jul 28, 2017 Taming Wave Functions with Neural Networks

The wave function is essential to most calculations in quantum mechanics but it's a difficult beast to tame. Can neural networks help?

Feb 27, 2017 Differentiable Memory and the Brain

We compare the Differentiable Neural Computer, a strong neural memory model, to human memory and discuss where the analogy breaks down.

Jan 7, 2017 Learning the Enigma with Recurrent Neural Networks

Recurrent Neural Networks are Turing-complete and can approximate any function. As a tip of the hat to Alan Turing, let's approximate the Enigma cipher.

Nov 26, 2016 A Bird's Eye View of Synthetic Gradients

Synthetic gradients achieve the perfect balance of crazy and brilliant. In a 100-line Gist I'll introduce this exotic technique and use it to train a neural network.

Sep 5, 2016 The Art of Regularization

Regularization has a huge impact on deep models. Let's visualize the effects of various techniques on a neural network trained on MNIST.

Aug 21, 2016 Scribe: Generating Realistic Handwriting with TensorFlow

Let's use a deep learning model to generate human-like handwriting. This work is based on Generating Sequences With Recurrent Neural Networks by Alex Graves.

Aug 5, 2016 Three Perspectives on Deep Learning

After being excited about this field for more than a year, I should have a concise and satisfying answer to the question, 'What is deep learning?' But I have three.